Let me put all my cards on the table. I like Rohit Sharma the captain. I have an enormous amount of respect for him for introducing to Indian cricket an appetite for risk that was unpalatable to it even as recently as two years ago. I am also given to understand he is open to a data-led understanding of his game, which is a rare quality for an India player, let alone a captain, and for that I believe he has added value to the India side post-2021. I can only hope that the argument I am about to offer isn't influenced by my fondness for Rohit the leader, though I believe it probably isn't.

The selection of Harshit Rana is one of the decisions by the think-tank that, as with many things to do with Indian cricket, has triggered a strong response back home. Indeed, I was privately of the opinion that India ought to go with Akash Deep for the Adelaide Test, for two reasons. Firstly, variable bounce was unlikely to be the chief enemy of batters a second time. Secondly, the India pacers were likely in for a longer workout, and a bowler with 10 matches' worth of first-class experience, who was visibly tiring towards the closing stages in Perth, would find the going tough. What I am about to undertake, however, is the tall task of explaining why cricket teams often make moves like this, where they expect that the combination which worked yesterday is likely to work today as well.

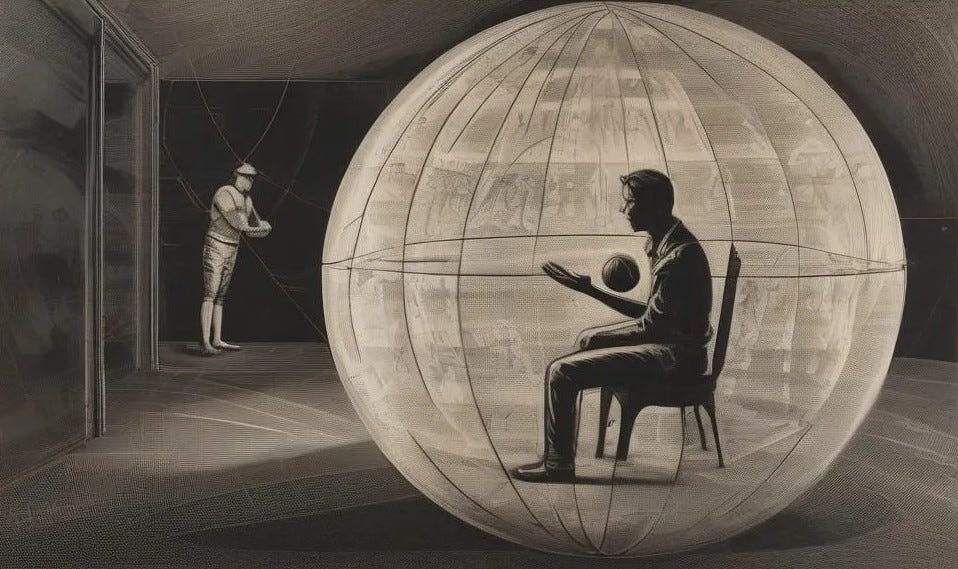

One of cricket's oldest adages is, "Never change a winning combination". Men as smart as MS Dhoni have sworn by this sentiment. Another form it often takes is an argument for incumbency. But as a youth, this idea never quite made sense to me. Why would one not want to fix something that ain't broke, if by fixing it one may be able to improve on its current state? In this article I will argue that, much to the contrary, the notion that one mustn't meddle with things that work fine the way they are is an acknowledgment of the fact that one isn't completely capable of understanding why said thing works fine the way it is. In other words: adaptive expectations are a consequence of the complexity of the algorithm generating outcomes.

Put in words, my argument is simple enough. Think about an invisible algorithm churning out states of play on the cricket field. These states can be thought of as simply as one in which opening with KL Rahul and Yashasvi Jaiswal is optimal versus one in which opening with Rohit and Jaiswal is optimal. As this algorithm becomes complex, it becomes difficult to predict the optimal action. But say you know that the state of the world today is likely to be the state of the world tomorrow (a reasonable supposition because states generally do not change overnight). Given no good-quality information to update your prior, it makes sense to play the same strategy in a successive round if it brought you success in the preceding round.

The internal algorithm of sports is complex. To paraphrase what I said in a previous post, sport is the drama of life taken and multiplied manifold. If you don't understand the algorithm generating sporting outcomes, you don't understand what it is exactly that makes a combination tick. So, even though the proposition of improving yourself by tweaking a winning combination can be tempting, the possibility that you might wreck it by making the wrong move is non-negligible. The risk-averse cricketer may decide in such a circumstance that it is better not to mess with things he doesn't understand, because the probability that the state changes overnight is low. Essentially, you don't understand the algorithm, so you might as well stick with what the algorithm has told you works.

This is basically my explanation for why sports teams prefer to stick with a winning combination. The reader who is satisfied by this simple argument should skip past the gobbledygook that is about to follow. Words are often communication enough, but I believe that formalism aids clarity. So I want to offer a very simple model that formalizes my argument.

The set-up is as follows. Suppose there are multiple time periods $t_1^k, t_2^k, t_3^k$, and so on, where the superscript $k$ is drawn from the set $\{0, 1\}$ and represents the state of the world in that period. In each time period, the agent chooses one of two actions, $\theta^0$ or $\theta^1$. Represent the actions chosen as $\theta_1^i, \theta_2^i, \theta_3^i$, and so on, where the subscript is the time period and the superscript $i$ is, again, drawn from the set $\{0, 1\}$. The agent receives positive utility from matching $i$ and $k$, that is, from matching his action to the state, and an equivalent negative utility from failing to match them. Without loss of generality, normalize these payoffs to $+1$ and $-1$:

$$
u(\theta^i, k) =
\begin{cases}
+1 & \text{if } i = k, \\
-1 & \text{if } i \neq k.
\end{cases}
$$

The state of the world today, $k_j$, need not be the state of the world tomorrow. The state is unknown to the agent at the start of every time period, but after choosing his action in a period the agent receives a signal: $g$ if $k$ equals $i$ and $b$ if $k$ does not equal $i$.¹ (Think of $g$ as something like the signal India received after opening the batting with Rahul in the Perth Test.) The timing of the game is as follows. In the first period, the agent plays an action and receives a signal. Based on the signal, the agent will decide his action in the next period, and so on.

Consider, first, the case of the simple algorithm. To keep things tractable, let’s tackle the simplest possible such algorithm, one in which the agent is able to predict the state of the world at a future time perfectly well. Suppose this state is $k$. The agent will simply match his action with the state by playing $\theta_1^k$ and be done with it. Essentially, given a simple algorithm, the agent achieves the best possible outcome.

Now consider the following complex algorithm. The state of the world in time period $t_j$ matches the state of the world in time period $t_{j-1}$ with a known probability $p$.² (The motivation behind $p$ being known is that over time players are usually able to tell that the state doesn’t fluctuate furiously.) To state things more clearly, the complex algorithm takes the following form:

$$
k_j =
\begin{cases}
k_{j-1} & \text{with probability } p, \\
1 - k_{j-1} & \text{with probability } 1 - p.
\end{cases}
$$

Now to analyze this problem. Consider the first time period. Suppose the agent plays the action $i = 1$ and receives the signal $g$. He knows, then, that the state of the world in the first time period was $k = 1$. Since he is aware of the algorithm in place, the nature of his utility function guarantees that he will want to play the same action in the second time period, as long as $p$ is greater than or equal to ½. It follows that he will continue to play the same action as long as he keeps receiving the signal $g$, but once he receives $b$ in any round, he will immediately switch to the other action in the next period.
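To spell out the step behind the ½ threshold, here is a short worked calculation. It assumes, purely as a normalization for illustration, that a match pays $+1$ and a mismatch pays $-1$, and it writes $\mathbb{E}[u]$ for the agent's expected utility in the second period after he has received $g$ in the first.

```latex
\begin{align*}
\mathbb{E}[u \mid \text{stay}]   &= p \cdot (+1) + (1 - p) \cdot (-1) = 2p - 1, \\
\mathbb{E}[u \mid \text{switch}] &= (1 - p) \cdot (+1) + p \cdot (-1) = 1 - 2p, \\
\mathbb{E}[u \mid \text{stay}] \ge \mathbb{E}[u \mid \text{switch}]
  &\iff 2p - 1 \ge 1 - 2p \iff p \ge \tfrac{1}{2}.
\end{align*}
```

The same comparison covers the round after a $b$ signal. A $b$ tells the agent that the state was the one he did not play, so switching is simply the move that lines his action up with the most recently revealed state.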

These are the two planks on which my argument rests: algorithmic complexity and conditional continuity. As long as the algorithm churning out states is complex, and players have reason to expect that the state today is likely to be the state tomorrow, it is sensible to play the same combination in a future time period provided it has worked in the present.
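For readers who prefer to see the rule in motion, below is a minimal simulation sketch of "stick while it works, switch when it fails". Everything in it is my own illustrative assumption rather than part of the model's statement: the function name `simulate`, the payoffs of +1 and -1, and the random first-period guess. Under those assumptions the agent always ends up playing last period's state, so the average payoff should settle near $2p - 1$.

```python
import random


def simulate(p, periods, seed=0):
    """Average per-period payoff of 'stay after g, switch after b'.

    Assumptions (illustrative only): two states and two actions labelled
    0 and 1, a payoff of +1 for a match and -1 for a mismatch, and a
    state that persists from one period to the next with probability p.
    """
    rng = random.Random(seed)
    state = rng.randint(0, 1)    # state k, unknown to the agent
    action = rng.randint(0, 1)   # first-period action is an arbitrary guess
    total = 0
    for _ in range(periods):
        matched = (action == state)
        total += 1 if matched else -1
        if not matched:
            action = 1 - action  # signal b: switch; after g the action is kept
        if rng.random() >= p:
            state = 1 - state    # the state flips with probability 1 - p
    return total / periods


if __name__ == "__main__":
    for p in (0.5, 0.7, 0.9):
        # Each average should land near 2p - 1, up to sampling noise.
        print(p, round(simulate(p, 100_000), 3))
```

At $p = ½$ the rule does no better than a coin toss, and the gain from sticking grows as the state becomes more persistent, which is the sense in which conditional continuity carries the argument.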

The model I have produced here is a simplified version of reality. There are portions of it that will doubtlessly taste sour to some readers. For instance, an implicit assumption that doesn’t hold true in the real world is that individual signals are strong enough to guide one’s response. But then again, simplification also has its advantages, one of them being tractability. My guess is that the same conclusion can be reproduced in a set-up in which signals of heterogeneous strengths are considered, or one in which the complexity of the algorithm is treated as endogenous, but those would make for much more difficult and much less readable models.

Everything needed to go right for India to pocket victory in Perth. They won the toss and elected to bat first based on an incorrect rationale, and were bowled out just in time for Jasprit Bumrah and Co. to have an extended go at the Australians on both sides of stumps. It is possible that this led to a skewed perception of the quality of the likes of Rana, but the bottom line is that it is expected that combinations which emerged successful once have an internal logic explaining their success that is perhaps not visible to the naked eye. Oftentimes this crashes and burns, but it is for good reason that this assumption is baked into the tactical consciousness of cricket. The internal algorithm of cricket is complex.

¹ A signal is not necessary for the argument to follow. Remember that the agent observes the utility he receives from his action and this serves as a signal of whether he has matched the state or not. I have included this concept nevertheless for it makes exposition easier.

² Note that this algorithm achieves complexity only in a probabilistically predictable sense. In this way, it is not very complex at all. But the fact that the conclusion I draw follows even with a lower bound on complexity augurs well for the argument.

I think there's a typo in your second algorithm - the bottom half should read 1-k(j-1) with probability 1-p, right?

Nevertheless, still a fun perspective on selection. I enjoyed it. Thanks.